Computer science: The history of computer development

Computer science continues to break boundaries today. Wearable electronic devices, self-driving cars, and video communications shape our lives on a daily basis.

The history of computer science provides important context for today’s innovations. Thanks to computer science, we landed a person on the moon, connected the world with the internet, and put a portable computing device in six billion hands.

In 1961, George Forsythe came up with the term “computer science.” Forsythe defined the field as programming theory, data processing, numerical analysis, and computer systems design. Only a year later, the first university computer science department was established. And Forsythe went on to found the computer science department at Stanford.

Looking back at the development of computers and computer science offers valuable context for today’s computer science professionals.

Milestones in the history of computer science

In the 1840s, Ada Lovelace became known as the first computer programmer when she published a step-by-step sequence of operations for Charles Babbage’s proposed Analytical Engine. Since then, computer technology has taken off. A look back at the history of computer science shows the field’s many critical developments, from the invention of punch cards to the transistor, computer chip, and personal computer.

1890: Herman Hollerith designs a punch card system to tabulate the US Census

The US Census had to collect records from millions of Americans. To manage all that data, Herman Hollerith developed a new system to crunch the numbers: an electric tabulating machine that read holes punched in cards. His punch card system became an early predecessor of the data-processing methods that later computers used. Instead of taking close to a decade to count by hand, census officials were able to take stock of America in one year.

1936: Alan Turing describes the Turing machine

Mathematician Alan Turing described a new kind of device in 1936: the Turing machine. The device, which Turing called an “automatic machine,” was an abstract model rather than a physical computer. It reads and writes symbols on a tape, one step at a time, according to a fixed table of rules. With this model, Turing defined what it means for a problem to be computable and helped found the field of theoretical computer science.

1939: Hewlett-Packard is founded

Hewlett-Packard had humble beginnings in 1939, when friends David Packard and William Hewlett used a coin toss to decide the order of their names in the company brand. The company’s first product was an audio oscillator, which Disney used in producing Fantasia. Later, it turned into a printing and computing powerhouse.

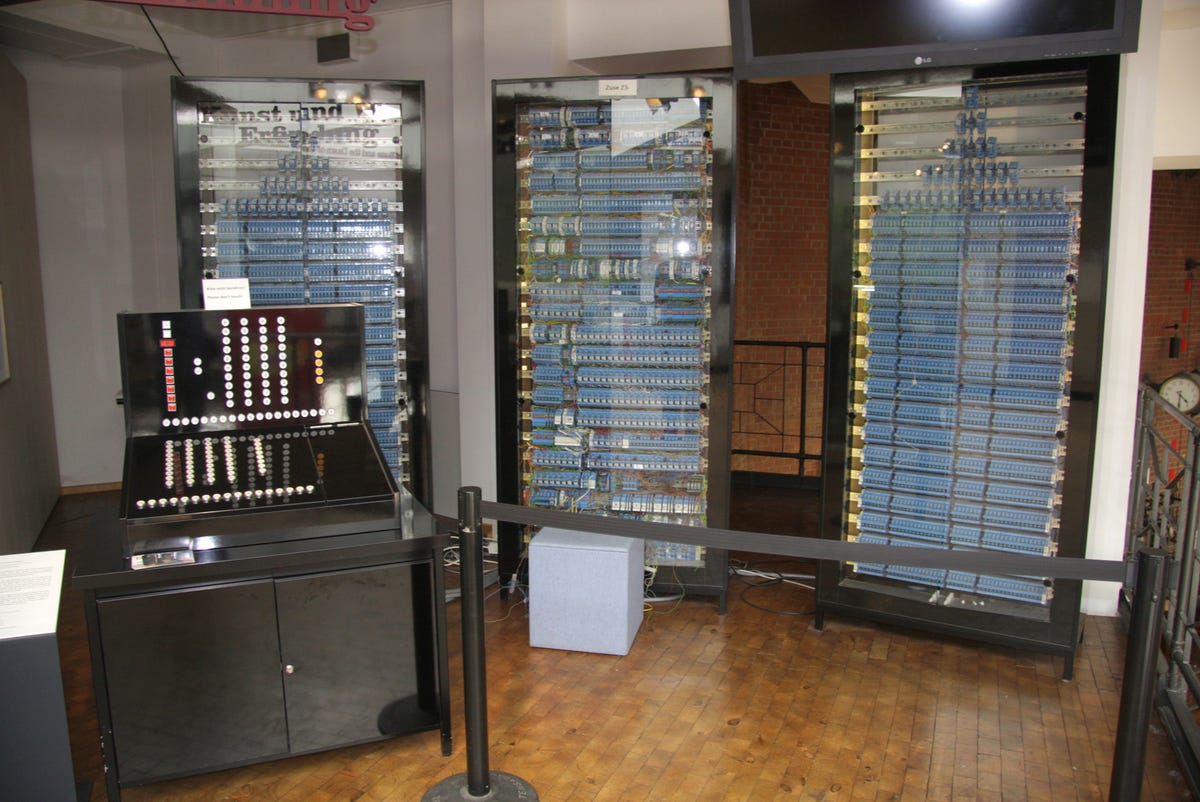

1941: Konrad Zuse completes the Z3 electromechanical computer

World War II drove a major leap forward in computing technology. Around the world, countries invested money in developing computing machines. In Germany, Konrad Zuse completed the Z3, an electromechanical computer built from telephone relays rather than electronic components. It is widely regarded as the first working programmable, fully automatic digital computer. The Z3 could store 64 numbers in its memory.

1943: John Mauchly and J. Presper Eckert begin building the Electronic Numerical Integrator and Computer (ENIAC)

The ENIAC was the size of a large room, and it required programmers to connect wires and set switches manually to run calculations. The ENIAC boasted 18,000 vacuum tubes and 6,000 switches. The US Army hoped to use the machine to calculate artillery firing tables during the war, but the 30-ton machine was so large and complex that construction was not finished until 1945.

1947: Bell Telephone Laboratories invents transistors

Transistors amplify and switch electronic signals, and they came out of Bell Telephone Laboratories in 1947. Three physicists developed the new technology: William Shockley, John Bardeen, and Walter Brattain. The men received the 1956 Nobel Prize in Physics for their invention, which changed the course of the electronics industry.

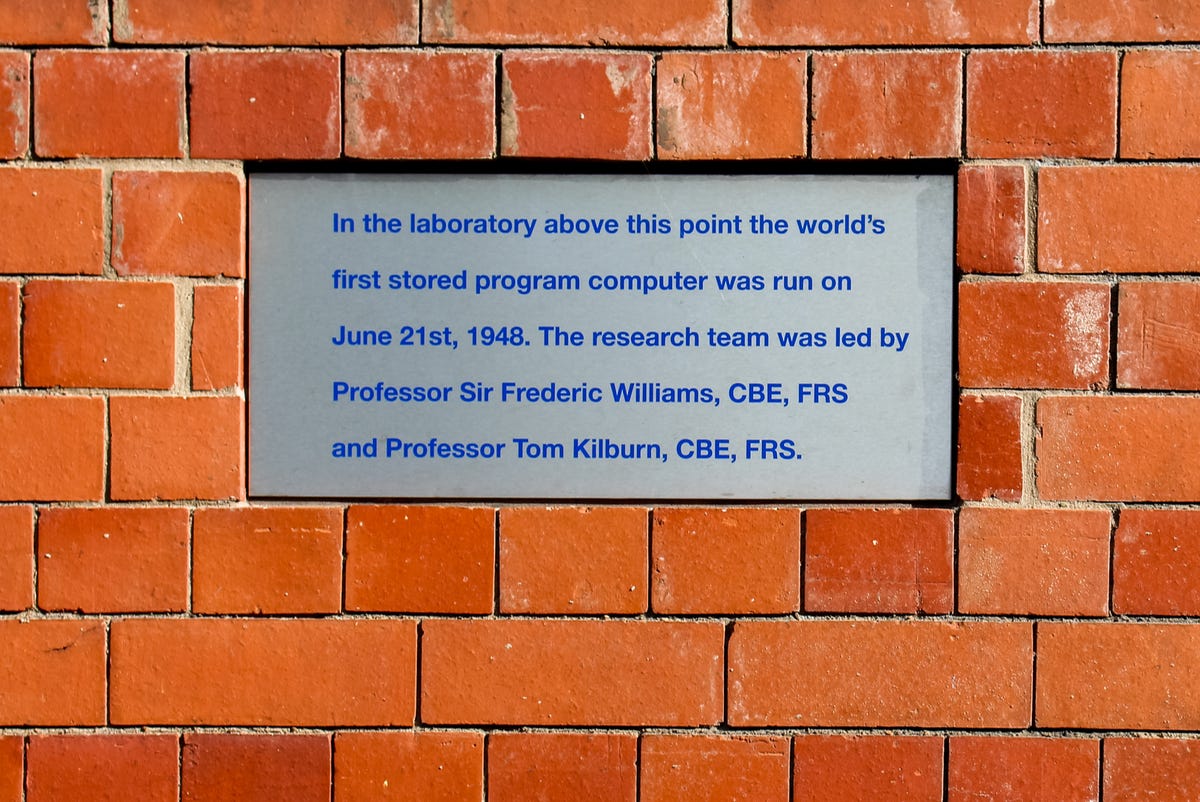

1948: Tom Kilburn’s computer program is the first stored program to run on a computer

For years, programmers had to set up machines by hand, rewiring them for each new task, until Tom Kilburn wrote a program that was stored in the computer’s own memory. The program ran in 1948 on an experimental machine at the University of Manchester, where Kilburn had helped develop memory that could store 2048 bits of information for several hours.

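1952 – 1959: Grace Hopper’s compiler work leads to COBOL

Computer hardware predates computer software. But software took a major leap forward through the work of Grace Hopper, whose team completed A-0, one of the first compilers, in 1952. Her later language FLOW-MATIC, which used English-like statements, heavily influenced COBOL, which an industry and government committee designed in 1959. Short for “common business-oriented language,” COBOL gave business programs a standard language that could run on computers from different manufacturers. Hopper, who retired from the Navy as a rear admiral, took computers a giant leap forward.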

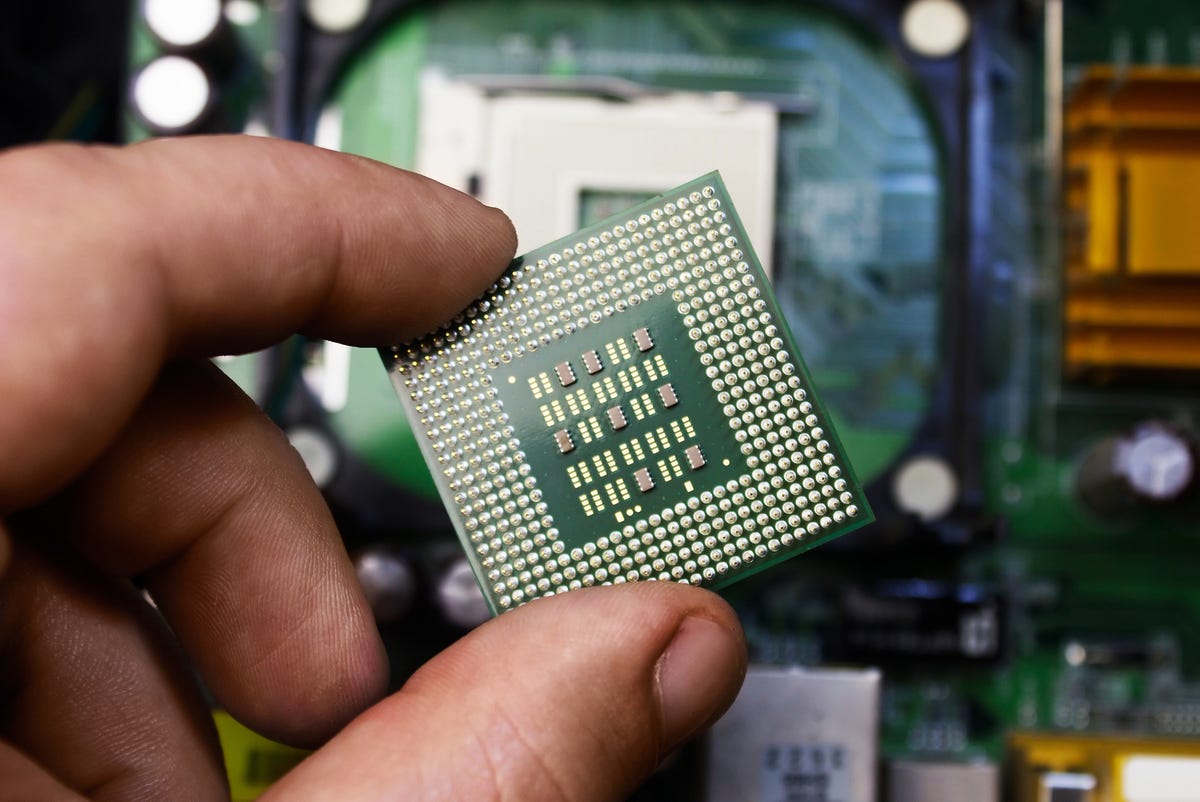

1958: Jack Kilby and Robert Noyce invent the computer chip

Working independently, Jack Kilby and Robert Noyce came up with the same idea: an integrated circuit that placed transistors and other components together on a single piece of semiconductor material. The microchip, as it became known, used the transistor as a jumping-off point to build an entire circuit on one chip, typically made from silicon. The computer chip opened the door to many important advances.

1962: First computer science department formed at Purdue University

As an academic discipline, computer science ranks as fairly new. In 1962, Purdue University opened the very first computer science department. The first computer science majors used punch card decks, programming flowcharts, and “textbooks” created by the faculty, since none existed.

1964: Douglas Engelbart develops a prototype for the modern computer

The inventor Douglas Engelbart came up with a tool that would shape modern computing: the mouse. The tool would help make computers accessible to millions of users. And that wasn’t Engelbart’s only contribution. He also demonstrated an early graphical user interface (GUI) that would shape the modern computer.

1971: IBM invents the floppy disk and Xerox invents the laser printer

The floppy disk might be a relic of the past in the 21st century, but it was a major leap forward in 1971 when IBM developed the technology. Compact and removable, the floppy disk made it easy to carry data from one machine to another and opened up new frontiers. That same year, Xerox came up with the laser printer, an invention still used in offices around the world.

1974: The first personal computers hit the market

By the 1970s, inventors were chasing the idea of personal computers. Thanks to microchips and other new technologies, computers shrank in size and price. In 1974, the Altair hit the market. A build-it-yourself kit, the Altair cost about $400 and sold thousands of units. The next year, Paul G. Allen and Bill Gates wrote a version of the BASIC programming language for the Altair and went on to found Microsoft.

1976: Steve Jobs and Steve Wozniak found Apple Computer

Working out of a Silicon Valley garage, Steve Jobs and Steve Wozniak founded Apple Computer in 1976. The new company would produce personal computers and skyrocket to the top spot in the tech industry. Decades later, Apple continues to innovate in personal computing devices.

1980 – Present: Rapid computing inventions and the Dot-com boom

What did computing look like in 1980? Few homes had personal computers, which were still quite expensive. Compare that situation to today: In 2020, the average American household had more than ten computing devices.

What changed? For one, computing technology took some major leaps forward, thanks to new tech companies, demand for devices, and the rise of mobile technology. In the 1990s, the dot-com boom turned some investors into overnight millionaires. Smartphones, artificial intelligence, Bluetooth, self-driving cars, and more represent the recent past and the future of computer science.

Seven impacts of computer science development

It’s hard to fully grasp the impact of computer science development. Thanks to computer science, people around the world can connect instantly, live longer lives, and share their voices. In diverse computer science jobs, tech professionals contribute to society in many ways.

This section looks at how the history of computer science has shaped our present and our future. From fighting climate change to predicting natural disasters, computer science makes a difference.

1. Connects people regardless of location

During the COVID-19 pandemic, millions of Americans suddenly relied on video chat services to connect with their loved ones. Communications-focused computer science disciplines link people around the globe. From virtual communications to streaming technology, these technologies keep people connected.

2. Impacts every aspect of day-to-day life

Millions of Americans wake up every morning thanks to a smartphone alarm, use digital maps to find local restaurants, check their social media profiles to connect with old friends, and search for unique items at online stores. From finding new recipes to checking who rang the doorbell, computer science shapes many choices in our daily lives.

3. Provides security solutions

Cybersecurity goes far beyond protecting data. Information security also keeps airports, public spaces, and governments safe. Computer security solutions keep our online data private and help organizations recover after data breaches. Ethical hackers continue to test systems for weaknesses so that flaws can be fixed before attackers exploit them.

4. Saves lives

Computer algorithms make it easier than ever before to predict catastrophic weather and natural disasters. Thanks to early warning systems, people can evacuate before a hurricane makes landfall or take shelter when a tsunami might hit. These computer science advances make a major difference by saving lives.

5. Alleviates societal issues

Global issues like climate change, poverty, and sanitation require advanced solutions. Computer science gives us new tools to fight these major issues — and the resources to help individuals advocate for change. Online platforms make it easier for charities to raise money to support their causes, for example.

6. Gives a voice to anyone with computer access

Computer access opens up a whole new world. Thanks to computers, people can learn more about social movements, educate themselves on major issues, and build communities to advocate for change. They can also develop empathy for others. Of course, that same power can be used to alienate and harm, adding an important layer of responsibility for computer scientists developing new tools.

7. Improves healthcare

Electronic medical records, health education resources, and cutting-edge advances in genomics and personalized medicine have revolutionized healthcare — and these shifts will continue to shape the field in the future. Computer science has many medical applications, making it a critical field for promoting health.

In conclusion

Computer science professionals participate in a long legacy of changing the world for the better. Students considering a computer science degree should understand the history of computer science development — including the potential harm that technology can cause. By educating themselves with computer science resources, tech professionals can understand the responsibility their field holds.

By practicing computer science ethically, professionals can make sure the future of tech benefits society while also protecting the security, privacy, and equality of individuals.