Computer Science Seniors Develop Cane to be the Eyes of the Visually Impaired

Two recent Trinity College graduates used their computer science know-how to develop a new way to help visually-impaired people navigate safely through their environments.

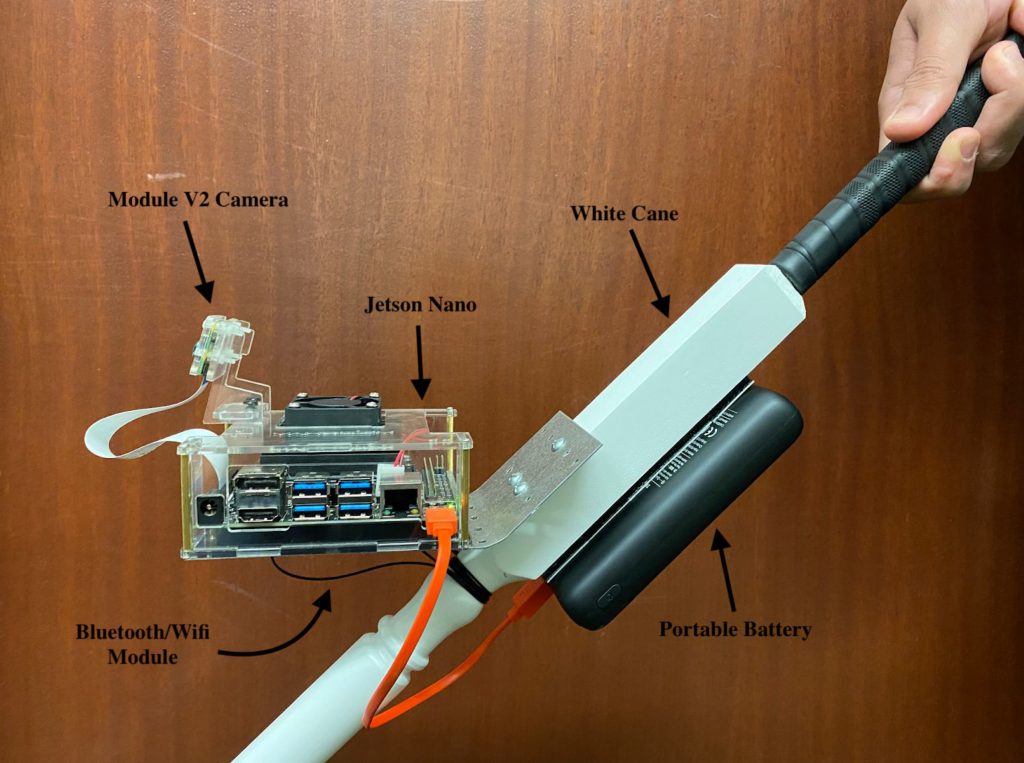

For their senior project, Alisa Levin ’21 and Rahul Mitra ’21 successfully built a cost-effective “Real-Time Object Detection Aid for the Visually Impaired.” Mounted on a white cane, a microcomputer with a camera uses machine learning to determine what’s in the path of a visually-impaired user; that information is announced aloud by the user’s smartphone, which is connected to the microcomputer via Bluetooth. See a brief video demonstration of the project here.

Levin, a computer science major and models and data minor from New York City, and Mitra, a computer science and physics major from Kolkata, India, will each begin working toward a Ph.D. in computer science this fall. Levin recently received a 2021 National Science Foundation Graduate Research Fellowship.

Professor of Computer Science Peter A. Yoon, who was the faculty advisor for Levin and Mitra’s project, said that all senior computer science majors must complete a year-long capstone project in which they design, implement, and test a software system. “This year, for the first time, students were given the option to work in groups, which more closely mimics a real-world software development process,” Yoon said.

Mitra wanted to work with something hardware-related, while Levin wanted to pursue a project that involved machine learning; their project ultimately incorporated both aspects. The two recent graduates completed this project using the programming and long-term project management skills they honed during their computer science courses and research experiences at Trinity.

Yoon said, “Their project required extensive knowledge and skills in virtually all layers of computer software and hardware systems—machine learning, wireless communication, systems programming, and app design, to name a few. Their work was one of the most comprehensive senior capstone projects I have supervised in recent years.”

As they stated in their project abstract, approximately 2.2 billion people worldwide have some form of visual impairment. Levin was inspired to pursue this project in part by Be My Eyes, an application that connects volunteers to visually-impaired users seeking help with tasks like clarifying the color of a sweater or reading the expiration date on a jar. “Rahul and I hashed out the idea for a real-time object detection aid that could help inform visually-impaired individuals of the items in their environment,” Levin said.

Mitra added, “This process uses machine learning on a portable microcomputer with a camera so that individuals are not reliant on the availability of volunteers. We decided to mount our system on a white cane—a device commonly used by low-vision individuals.”

Information from the camera and microcomputer is transferred via Bluetooth to the user’s smartphone, which relays it in audio form, alerting the user to any obstacles ahead. “An example of such an auditory message would be, ‘one car and two people,’” Levin said. “The total cost of the materials we used is $245, which is 41 percent of the cost of the WeWALK cane, a smart cane currently on the market. Our hope is that our device is an accessible alternative to the WeWALK cane, and can further offer unique object detection functionality.”
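To illustrate how a list of detected objects might become a spoken phrase like the one Levin describes, here is a minimal Python sketch. It is not taken from the students' code: the function names `summarize` and `pluralize`, the small number-word table, and the simplified plural rules are all assumptions made for this example, and the input is assumed to be a plain list of object labels produced by some detector.

```python
from collections import Counter

# Hypothetical helper tables; a real system would need fuller coverage.
NUMBER_WORDS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five"}
IRREGULAR_PLURALS = {"person": "people"}


def pluralize(label: str, count: int) -> str:
    """Return the label in singular or (naively) plural form."""
    if count == 1:
        return label
    if label in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[label]
    if label.endswith(("s", "x", "ch", "sh")):
        return label + "es"
    return label + "s"


def summarize(labels: list[str]) -> str:
    """Turn detected labels into a short phrase suitable for text-to-speech."""
    counts = Counter(labels)  # keeps first-seen order of labels
    phrases = [
        f"{NUMBER_WORDS.get(n, str(n))} {pluralize(label, n)}"
        for label, n in counts.items()
    ]
    if not phrases:
        return "no objects detected"
    if len(phrases) == 1:
        return phrases[0]
    if len(phrases) == 2:
        return " and ".join(phrases)
    return ", ".join(phrases[:-1]) + ", and " + phrases[-1]


print(summarize(["car", "person", "person"]))  # one car and two people
```

In a setup like the one described, a string of this kind would be what the phone passes to its text-to-speech engine; how the actual project builds its messages is not specified in this article.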

As Mitra explained, the project’s codebase is publicly available on GitHub, a code-sharing website. “Any developer could pick up where we left off,” he said. “A lot of good work has gone into this project and we believe keeping our code public will help other developers who want to get involved in this kind of work.”

Levin and Mitra acknowledge that they were not able to gather feedback from the visually-impaired community, and they recognize the importance of having the device tested by individuals who could benefit directly from it. “Their feedback would be invaluable in helping us plan our next steps if we take this project further,” Mitra said. “Accessibility technology is a huge sphere right now. With the growth of high-performance microcomputers, I believe it’s only a matter of time before machine learning is integrated with the well-known white cane on a large scale.”

Levin added, “We hope this is a good step toward a machine learning-enhanced tool that could aid visually-impaired individuals in navigating busy environments.”

For their work, Levin and Mitra received the Travelers Senior Research Award, which recognizes an outstanding senior project demonstrating technical maturity, completeness, and relevance. “It is an extraordinary accomplishment for undergraduate students,” Yoon said of their project.

“Working with Prof. Yoon was a fantastic experience,” Mitra said. “We met him bi-weekly and he always brought unique perspectives to problems we ran into during the course of our project. I’ve always enjoyed his ‘learn-by-doing’ philosophy in the classroom. Conducting a senior project under him was similar in that he always suggested new approaches we could take. Prof. Yoon was especially instrumental in helping us put the entire system together in a user-friendly manner.”

After graduating from Trinity, Mitra will conduct research in computer graphics while pursuing a Ph.D. in computer science. “The research opportunities provided by Trinity convinced me that graduate school is the right decision for me,” he said. “The knowledge gained from Prof. Yoon’s programming courses was a key factor in influencing my decision to pursue graduate studies in computer science.”

As Levin pursues a Ph.D. in computer science, she plans to continue to combine her interests in machine learning and assistive technologies. “I’m very grateful to all of the wonderful faculty in the Computer Science Department for supporting us over these past four years,” she said.

Read more about senior projects by engineering and computer science majors here.